24 September 2020 | RecSys for MAD: an empirical study | RecSys

2.1 Experimental setup

As mentioned in our previous post, our intent is to apply these methods to the MAD.

Our real-world testbed is a dataset of about 1.7 million executed trades over a period of 3 months (from 15-Aug-2019 to 15-Nov-2019). It includes more than 200 distinct subjects dealing in more than 5k distinct securities traded across more than 50 markets. Below is an extract of a few records from the dataset, to give an idea of its content.

Executed contracts dataset example

| SUBJECT | ISIN | QTY | PRICE | COUNTERVALUE | CURR | TIMESTAMP |
|---|---|---|---|---|---|---|
| 1007120 | JP3672400003 | 1700.0 | 648.200 | 1101940.00 | JPY | 2019-08-15T00:03:00.061000Z |
| 1039910 | AU000000ANO7 | 579.0 | 5.300 | 3068.70 | AUD | 2019-08-15T00:05:22.805000Z |
| 1039910 | AU000000ANO7 | 392.0 | 5.300 | 2077.60 | AUD | 2019-08-15T00:09:06.733000Z |
| 1044976 | AU000000STM0 | 250000.0 | 0.030 | 7500.00 | AUD | 2019-08-15T00:14:34.038000Z |
| 1044976 | AU000000BOE4 | 100000.0 | 0.056 | 5600.00 | AUD | 2019-08-15T00:14:40.368000Z |
| 1043883 | GB00B03MLX29 | 30.0 | 25.100 | 753.00 | EUR | 2019-08-15T07:00:00.870623Z |
| 1021212 | FR0000124141 | 16.0 | 21.700 | 347.20 | EUR | 2019-08-15T07:00:01.731558Z |
| 1021212 | FR0000124141 | 10.0 | 21.700 | 217.00 | EUR | 2019-08-15T07:00:01.731558Z |
| 1021212 | FR0000124141 | 84.0 | 21.700 | 1822.80 | EUR | 2019-08-15T07:00:01.731558Z |
| 1021212 | FR0000124141 | 338.0 | 21.700 | 7334.60 | EUR | 2019-08-15T07:00:01.731558Z |
| 1021212 | FR0000124141 | 126.0 | 21.700 | 2734.20 | EUR | 2019-08-15T07:00:01.731558Z |
| 1021212 | FR0000124141 | 50.0 | 21.700 | 1085.00 | EUR | 2019-08-15T07:00:01.731558Z |
| 1021212 | FR0000124141 | 95.0 | 21.700 | 2061.50 | EUR | 2019-08-15T07:00:01.731558Z |
| 1021212 | FR0000124141 | 126.0 | 21.700 | 2734.20 | EUR | 2019-08-15T07:00:01.731558Z |
| 1056156 | DE000UNSE018 | 4.0 | 26.560 | 106.24 | EUR | 2019-08-15T07:00:02.730000Z |
| 1043883 | FR0000131104 | 48.0 | 39.690 | 1905.12 | EUR | 2019-08-15T07:00:03.204824Z |

We imagine the final product working as follows: a definite time window of past data is fed into the RecSys so that it can calibrate and build its own representations of the USERs and ITEMs. Future operations are then submitted to the calibrated RecSys: for each (USER, ITEM) pair corresponding to an incoming trade, the RecSys returns a score that can be interpreted as an affinity value or, more interestingly for our purpose, as an anomaly score.
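The calibrate-then-score workflow described above can be sketched as a simple time split. This is only a minimal illustration, not the actual pipeline: the cutoff date, the `parse_ts` and `split_by_time` helpers and the tuple layout are assumptions made for the example, and the last record is invented.

```python
from datetime import datetime, timezone

# (subject, isin, timestamp) records. The first three come from the sample
# table; the last one is invented to have a trade after the cutoff.
trades = [
    ("1007120", "JP3672400003", "2019-08-15T00:03:00.061000Z"),
    ("1039910", "AU000000ANO7", "2019-08-15T00:05:22.805000Z"),
    ("1043883", "GB00B03MLX29", "2019-08-15T07:00:00.870623Z"),
    ("1043883", "FR0000131104", "2019-11-10T09:30:00.000000Z"),  # invented
]


def parse_ts(ts):
    """Parse the dataset's UTC timestamp format into an aware datetime."""
    parsed = datetime.strptime(ts, "%Y-%m-%dT%H:%M:%S.%fZ")
    return parsed.replace(tzinfo=timezone.utc)


def split_by_time(records, cutoff):
    """Split records into a calibration window and the trades to score."""
    past = [r for r in records if parse_ts(r[2]) < cutoff]
    future = [r for r in records if parse_ts(r[2]) >= cutoff]
    return past, future


# Hypothetical cutoff: everything before it calibrates the model.
cutoff = datetime(2019, 11, 1, tzinfo=timezone.utc)
calibration, to_score = split_by_time(trades, cutoff)

# Each future trade becomes a (USER, ITEM) pair submitted for scoring.
pairs_to_score = [(subject, isin) for subject, isin, _ in to_score]
```

Here the ITEM is taken to be the ISIN; any other ITEM definition would simply change how the pair is built.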

2.2 Hyperparameter selection

To find a suitable setup for the anomaly detection tool, we had to work on two quite different levels, both common in machine learning: hyperparameter selection and model training.

Hyperparameter selection revolves around choosing, and often engineering, from the dataset at our disposal the features a RecSys model is built upon, namely the USERs, the ITEMs and the INTERACTIONs.

2.2.1 USER

The market player is by its very nature the preferred option for the USER, while several choices are available for the ITEMs, allowing one to set up different RecSys, each focusing on a different trait of the USER’s behaviour.

2.2.2 ITEM

A natural choice for the ITEM would be the ISIN, the unique code identifying a financial instrument. This would allow us to group securities and subjects based on their mutual interactions. An alternative choice for the ITEM could be the countervalue of the executed order, making the model aware of the typical volume range in which each USER operates. In this case the selected dimension is a continuous quantity, so a bucketing procedure is needed to convert it into a categorical attribute drawn from a finite, discrete list of items.

Elaborating further, one may construct new dimensions by combining the existing ones, aiming to discover patterns in the subjects' behaviour that correlate across different dimensions. For example, one may join the ISIN with some bucketing of the countervalue in order to explore subjects' attitudes where some kinds of securities are traded in low volumes and/or in large amounts, while others are traded in high volumes and/or in small amounts.
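As an illustration of the bucketing step, here is a minimal sketch that maps a continuous countervalue to a categorical label. The power-of-ten edges and the `CV` label prefix are assumptions chosen for the example, and currency conversion is ignored for simplicity; real edges would have to be tuned on the data.

```python
from bisect import bisect_right

# Hypothetical bucket edges (powers of ten), not taken from the study.
EDGES = [1e2, 1e3, 1e4, 1e5, 1e6]


def countervalue_bucket(countervalue):
    """Return a categorical ITEM label for a continuous countervalue."""
    # bisect_right counts how many edges are <= countervalue,
    # which is the index of the bucket the value falls into.
    return f"CV{bisect_right(EDGES, countervalue)}"


small = countervalue_bucket(106.24)       # between 1e2 and 1e3
medium = countervalue_bucket(3068.70)     # between 1e3 and 1e4
large = countervalue_bucket(1101940.00)   # above 1e6
```

Logarithmic edges are one reasonable option when values span several orders of magnitude, as they do in the sample records; quantile-based edges would be another.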
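A combined ITEM of this kind could be built by concatenating the ISIN with a size label. The sketch below is only one possible encoding: the two edges, the `small`/`medium`/`large` labels and the `|` separator are all assumptions made for the example.

```python
from bisect import bisect_right

# Hypothetical edges splitting countervalues into three size classes.
EDGES = [1e3, 1e4]
LABELS = ["small", "medium", "large"]


def composite_item(isin, countervalue):
    """Join the ISIN with the countervalue size class into one ITEM key."""
    size = LABELS[bisect_right(EDGES, countervalue)]
    return f"{isin}|{size}"


# The same security yields different ITEMs depending on the trade size.
item_a = composite_item("FR0000124141", 347.20)
item_b = composite_item("FR0000124141", 7334.60)
item_c = composite_item("JP3672400003", 1101940.00)
```

With this encoding the RecSys sees a small trade and a medium trade in the same security as interactions with two distinct ITEMs.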

2.2.3 INTERACTION

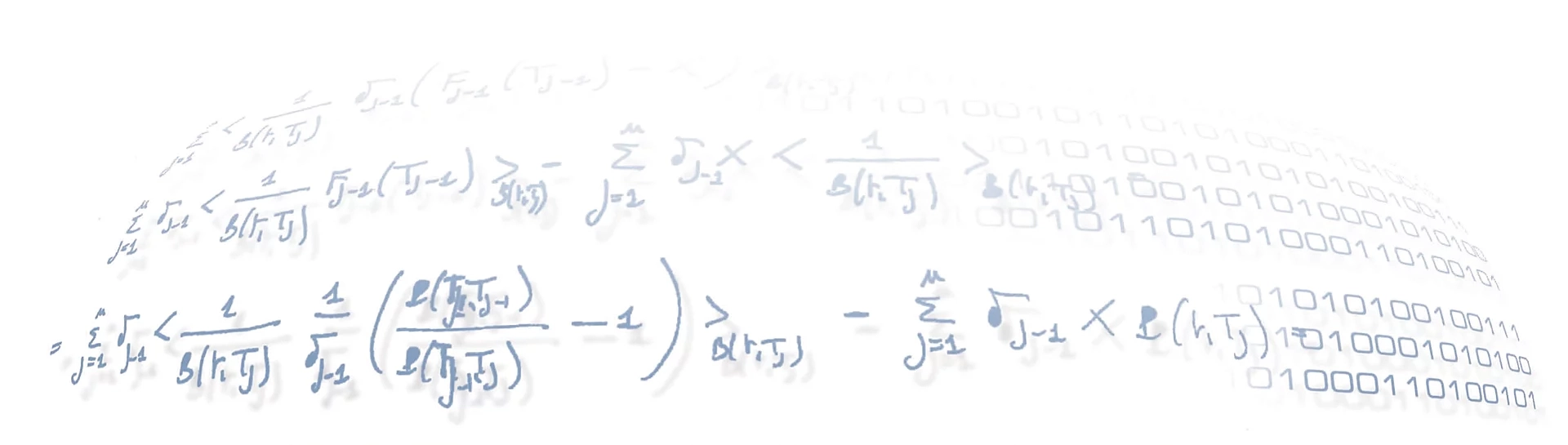

For each choice of the ITEM dimension, an appropriate metric needs to be chosen as the INTERACTION of the RecSys model. This can be a field or an engineered feature of the dataset that will be regarded as a sort of rating that a given USER ascribes to a given ITEM. A non-categorical, quantitative dimension is preferred here, so that a larger (smaller) value can be associated with a larger (smaller) rating. In our case a natural choice is the countervalue, since a larger volume of purchases expresses a greater valuation of a financial instrument. Even if a direct quantitative dimension is not available in the original data, a rating can be obtained simply by counting the records of the original flat database after grouping it by the USER and ITEM dimensions, used together as a two-key index. In fact, even when a quantitative dimension of the original database is used as the rating, its values still have to be aggregated after the group-by, since the RecSys needs a single rating score for each (USER, ITEM) pair.

The specific aggregation function used to build the rating can be critical to the ability of the RecSys to capture the patterns in the users' behaviour. For example, a simple aggregation function like count (or sum, for a direct quantitative dimension) may result in a disproportionate characterization of the population, since a very few USERs make far more transactions (or exchange far more countervalue) than the others.

A naive improvement could be to normalize the rating by dividing it by each USER's total count (or total sum of the countervalue). However, this may still not be suitable: each of the few securities traded by a subject with very little activity would receive a high rating, while each of the many securities traded by a subject with a broad and scattered activity would receive a very small rating.

It turned out that a better approach is to normalize the aggregation function by the max, instead of the total, over each USER. In this way, for example, if a sporadic subject traded an equal amount (either of countervalue or of number of deals) of a few securities, they will all receive an equal rating for that subject, regardless of the total amount of activity. Similarly, if a very active subject traded many different securities, each in a similar amount, they will all receive a similar rating value, not deflated by the subject's own widespread presence.
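The group-by step can be sketched in a few lines. This is a minimal illustration using a handful of rows from the sample table, with the ISIN as the ITEM; the `aggregate` helper is a name introduced for the example only.

```python
from collections import defaultdict

# (subject, isin, countervalue) rows taken from the sample table.
rows = [
    ("1039910", "AU000000ANO7", 3068.70),
    ("1039910", "AU000000ANO7", 2077.60),
    ("1044976", "AU000000STM0", 7500.00),
    ("1044976", "AU000000BOE4", 5600.00),
]


def aggregate(rows):
    """Collapse flat trade records into one rating per (USER, ITEM) pair."""
    counts = defaultdict(int)    # rating as number of deals
    sums = defaultdict(float)    # rating as total countervalue
    for user, item, value in rows:
        counts[(user, item)] += 1
        sums[(user, item)] += value
    return dict(counts), dict(sums)


counts, sums = aggregate(rows)
```

Either dictionary can serve as the INTERACTION: `counts` needs no quantitative field at all, while `sums` uses the countervalue directly.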
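The difference between the two normalizations can be seen on a toy example. The sketch below uses invented ratings for a hypothetical sporadic subject and a hypothetical active one; the `normalize` helper and its `how` parameter are assumptions introduced for illustration.

```python
from collections import defaultdict

# Invented aggregated ratings (e.g. deal counts) per (USER, ITEM) pair.
ratings = {
    ("sporadic", "A"): 2, ("sporadic", "B"): 2,
    ("active", "A"): 100, ("active", "B"): 100,
    ("active", "C"): 100, ("active", "D"): 100,
}


def normalize(ratings, how):
    """Divide each rating by the per-USER total or the per-USER max."""
    if how not in ("total", "max"):
        raise ValueError("how must be 'total' or 'max'")
    per_user = defaultdict(list)
    for (user, _item), value in ratings.items():
        per_user[user].append(value)
    reducer = sum if how == "total" else max
    denom = {user: reducer(values) for user, values in per_user.items()}
    return {key: value / denom[key[0]] for key, value in ratings.items()}


by_total = normalize(ratings, "total")
by_max = normalize(ratings, "max")
```

With the total, each security of the sporadic subject gets 0.5 while each security of the active subject gets only 0.25, even though both subjects spread their activity evenly. With the max, every pair gets 1.0, so the rating reflects how a security ranks within the subject's own activity rather than how many securities the subject trades.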