09 February 2022

Market Abuse. Is it possible to reduce false positive alarms?

The context: Market Abuse Detection

Market Abuse Detection (MAD) is the activity of monitoring the data flows of a financial marketplace (prices, orders and trades) in order to find anomalous and suspicious behavior by market participants. MAD consists of looking for a set of patterns in the data that suggest a player may have tried to manipulate market prices or gained an unfair trading advantage by exploiting illegal or privileged information.

A set of rules, laid down by the Regulator in several regulations, defines what counts as an anomalous pattern in the data flow. These rules are grouped into six categories: Insider Dealing, Market Manipulation, HFT, Cross Product Manipulation, Inter-Trading Venues Manipulation and Bid-Ask Spread.

Each pattern is implemented as an algorithm, i.e. a metric computed as a function of market data (prices, volumes, order frequencies, executed trades, and so on). The metric has to be monitored and kept within specific ranges of values. When a threshold is exceeded, the case must first be analyzed and, if confirmed, can trigger a report to the Regulator. There are at least 50 algorithms, each with its own thresholds to set.
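As a rough illustration of how a single pattern turns into alarms, the sketch below computes a hypothetical order-frequency metric per participant and raises an alarm whenever the metric exceeds a threshold. The metric definition, the participant IDs, the order records and the threshold value are all assumptions made for illustration, not the regulatory definition of any specific algorithm.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Alarm:
    algorithm: str
    participant: str
    value: float
    threshold: float


def order_frequency(orders, window_seconds):
    """Hypothetical metric: orders per second sent by each participant in one time window."""
    counts = Counter(participant for participant, _timestamp in orders)
    return {p: n / window_seconds for p, n in counts.items()}


def check_threshold(algorithm, metric, threshold):
    """Raise one alarm for every participant whose metric exceeds the threshold."""
    return [
        Alarm(algorithm, p, v, threshold)
        for p, v in sorted(metric.items())
        if v > threshold
    ]


# Hypothetical order records: (participant, timestamp in seconds) within a 1-second window.
orders = [("P1", 0.5), ("P2", 0.1), ("P2", 0.2), ("P2", 0.3), ("P2", 0.9), ("P3", 0.7)]
metric = order_frequency(orders, window_seconds=1.0)

# The threshold value is the institution's choice; 3.0 is purely illustrative.
alarms = check_threshold("Order frequency", metric, threshold=3.0)
print(alarms)
```

Only participant P2 (4 orders in the window) exceeds the illustrative threshold, so a single alarm is raised.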

MAD is delegated to market participants, so each institutional player has to monitor the data flow and, where appropriate, report abuses to the Regulator. Institutional players must therefore equip themselves with tools that implement the regulatory patterns and raise alarms whenever a threshold is exceeded.

Since the numerical value of each threshold is left to the player, fine-tuning each algorithm so that it raises neither too many nor too few alarms always takes considerable effort. Because the best configuration is hard to find, those who monitor the alarms end up losing confidence in the monitoring tool, which produces too many false positive alarms.

Table 1 summarizes the activity of the Compliance Officers of a European tier-one bank over about one year. About 360,000 alarms were generated in total, and the number of "Closed" alarms vastly exceeds the number of "Signaled" ones (i.e. those sent to the Regulator). All these closed alarms can be regarded as false positives raised by the system.

Table 1

| Alarm type | Share of total | Closed | Flagged | Signaled | Total | Closed / Total |
|---|---|---|---|---|---|---|
| Large daily gross | 19.3% | 69855 | 52 | 125 | 70032 | 0.997 |
| Insider trading MAR | 18.9% | 68442 | 78 | 199 | 68719 | 0.996 |
| Pair crossed trades | 12.7% | 46028 | 0 | 4 | 46032 | 1.000 |
| Order frequency | 5.9% | 21568 | 3 | 19 | 21590 | 0.999 |
| Painting the tape | 6.0% | 21379 | 271 | 169 | 21819 | 0.980 |
| OTC off price | 5.0% | 18155 | 2 | 0 | 18157 | 1.000 |
| Daily gross leading price move | 4.7% | 16945 | 35 | 117 | 17097 | 0.991 |
| Daily net leading price move | 3.6% | 13006 | 16 | 72 | 13094 | 0.993 |
| Smoking | 2.9% | 10724 | 0 | 0 | 10724 | 1.000 |
| Unbalanced bid-ask spread | 2.5% | 9134 | 0 | 0 | 9134 | 1.000 |
| Crossed trades | 2.3% | 8202 | 1 | 322 | 8525 | 0.962 |
| Front running and tailgating | 2.2% | 7995 | 0 | 0 | 7995 | 1.000 |
| Decreasing the offer | 1.7% | 6330 | 0 | 2 | 6332 | 1.000 |
| Advancing the bid | 1.7% | 6017 | 2 | 15 | 6034 | 0.997 |
| Spoofing | 1.4% | 4803 | 389 | 4 | 5196 | 0.924 |
| Abusive squeeze | 1.3% | 4538 | 1 | 69 | 4608 | 0.985 |
| Momentum ignition | 1.3% | 4517 | 85 | 36 | 4638 | 0.974 |
| Marking the close | 1.2% | 4301 | 10 | 75 | 4386 | 0.981 |
| Quote stuffing | 0.9% | 3337 | 1 | 0 | 3338 | 1.000 |
| Creation of a ceiling in the price pattern | 0.9% | 3195 | 0 | 6 | 3201 | 0.998 |
| Creation of a floor in the price pattern | 0.8% | 2738 | 0 | 5 | 2743 | 0.998 |
| Attempted position reversal | 0.8% | 2564 | 313 | 2 | 2879 | 0.891 |
| Deleted orders | 0.5% | 1721 | 6 | 18 | 1745 | 0.986 |
| Position reversal | 0.4% | 1271 | 21 | 0 | 1292 | 0.984 |
| Front running | 0.3% | 1042 | 0 | 0 | 1042 | 1.000 |
| Wash trades on own account | 0.3% | 976 | 3 | 0 | 979 | 0.997 |
| Improper matched orders | 0.2% | 863 | 2 | 37 | 902 | 0.957 |
| Placing orders with no intention of executing ... | | 422 | 0 | 0 | 422 | 1.000 |
| Pump and dump | 0.1% | 271 | 8 | 6 | 285 | 0.951 |
| Front running and tailgating OTC | 0.1% | 235 | 0 | 0 | 235 | 1.000 |
| Ping orders | 0.1% | 232 | 1 | 0 | 233 | 0.996 |
| Inter trading venues manipulation | 0.0% | 133 | 0 | 0 | 133 | 1.000 |
| Trash and cash | 0.0% | 70 | 4 | 0 | 74 | 0.946 |
| Underlying leaded cross product manipulation | 0.0% | 1 | 0 | 0 | 1 | 1.000 |

Machine Learning

Machine Learning (ML) methods are a natural place to look for automated solutions to this kind of problem. If a supervised learning algorithm could learn the typical behavior of a user (here, the compliance officer) on its own, it could be used to close false positive alarms automatically. This would save a lot of time in daily work and leave the focus on the alarms that are worth analyzing.

But why bother with machine learning? Wouldn't it be enough to configure the thresholds better in order to reduce the false positives? Not really. If the thresholds are too restrictive, the alarm is not raised at all, and the compliance officer has no chance to apply their experience. Moreover, since no alarm is generated, they run the risk of missing, and therefore not signaling, a trade that actually constitutes market abuse.

For these reasons, the compliance officer prefers thresholds that are not too restrictive, in order to minimize the risk of missing an abuse. The other side of the coin is that the system generates many potential false positives, all of which must be checked manually.
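The trade-off can be illustrated with synthetic data: for a single hypothetical metric, a low threshold raises many alarms but misses few genuine abuses, while a high threshold does the opposite. The two score distributions (benign events and abuses) are invented for illustration and do not come from Table 1.

```python
import numpy as np

# Synthetic metric values (illustrative only): many benign events and a few
# genuine abuses, which tend to produce larger values of the metric.
rng = np.random.default_rng(0)
benign = rng.normal(loc=1.0, scale=0.5, size=10_000)
abusive = rng.normal(loc=2.5, scale=0.7, size=20)


def sweep(thresholds):
    """For each threshold, count the alarms raised and the abuses that raise no alarm."""
    rows = []
    for t in thresholds:
        alarms = int((benign > t).sum() + (abusive > t).sum())
        missed = int((abusive <= t).sum())
        rows.append((t, alarms, missed))
    return rows


results = sweep([1.5, 2.0, 2.5, 3.0])
for t, alarms, missed in results:
    print(f"threshold={t:.1f}  alarms={alarms:5d}  missed abuses={missed:2d}")
```

Raising the threshold always shrinks the number of alarms to review and never reduces the number of missed abuses; no single threshold removes both problems at once.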

In principle, one could argue that the same reasoning applies to an ML algorithm: why close alarms with ML if it is better to see them? The point is that ML is not based on thresholds that must be manually configured and carefully adjusted. ML learns from the past decisions of the compliance officer how to classify false positives, i.e. it learns the judgment a human being has expressed about past alarms. The overall judgment process, which combines algorithmic thresholds and ML, is then a mix of algorithms and human experience (the experience of the specific Compliance Officer), which is hopefully more effective than algorithms with restrictive thresholds alone!

Methodology (Random Forest)

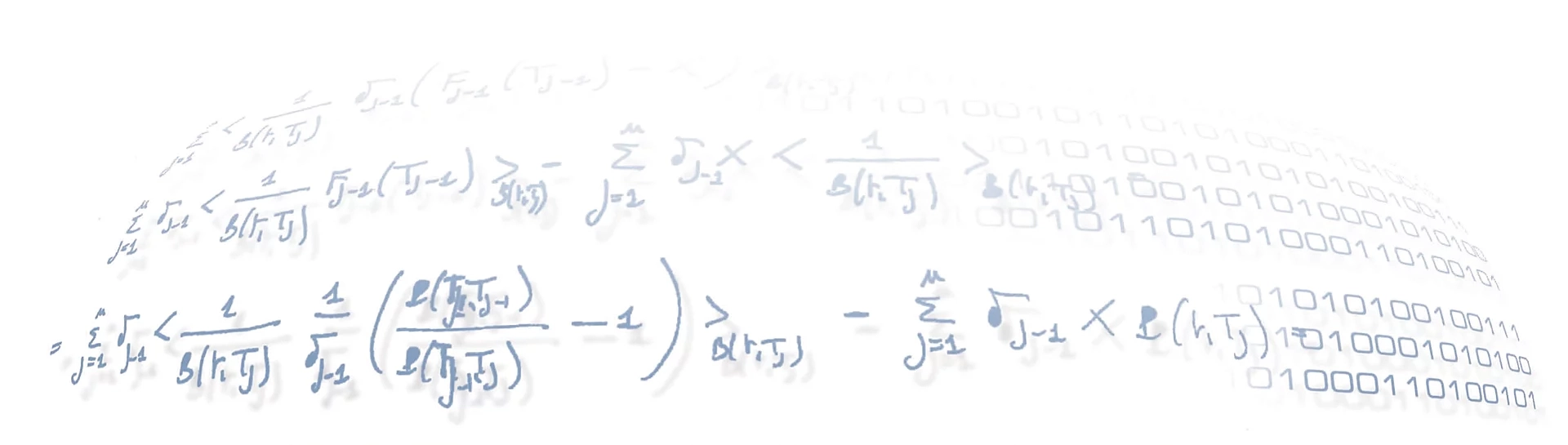

The problem of closing the false positive alarms can be framed as a canonical binary classification task (Duda et al. 2001). Table 1 shows three classes: Closed, Flagged and Signaled. Flagged alarms are those that require further investigation because they are doubtful and need the judgment of a more experienced officer. Since we want ML to recognize only the alarms that are false positives "for sure", in our setup we treat Flagged alarms as Signaled. This leaves two classes, Closed and Signaled, i.e. a binary classification problem.
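A minimal sketch of this relabeling, assuming a hypothetical alarm log stored in a pandas DataFrame with a `status` column holding the officer's final decision. The column names, alarm IDs and rows are illustrative; Signaled (with Flagged merged in) is encoded as the positive class 1.

```python
import pandas as pd

# Hypothetical alarm log: one row per alarm with the compliance officer's final status.
alarms = pd.DataFrame({
    "alarm_id": [1, 2, 3, 4, 5],
    "algorithm": ["Spoofing", "Painting the tape", "Spoofing",
                  "Crossed trades", "Insider trading MAR"],
    "status": ["Closed", "Flagged", "Closed", "Signaled", "Closed"],
})

# Binary target: 0 = Closed (false positive "for sure"), 1 = Signaled.
# Doubtful Flagged alarms are merged into the Signaled class.
label_map = {"Closed": 0, "Flagged": 1, "Signaled": 1}
alarms["label"] = alarms["status"].map(label_map)

# A status missing from label_map would become NaN; fail loudly instead of training on it.
assert alarms["label"].notna().all(), "unexpected alarm status"
print(alarms[["alarm_id", "status", "label"]])
```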

For this kind of problem, where an item has to be assigned to the right class based on a set of its characteristics (features), many ML methods are available, including:

- Naive Bayes

- Logistic Regression

- K-Nearest Neighbors

- Support Vector Machine

- Decision Tree

- Bagging Decision Tree (Ensemble Learning I)

- Boosted Decision Tree (Ensemble Learning II)

- Random Forest (Ensemble Learning III)

- Voting Classification (Ensemble Learning IV)

- Neural Network (Deep Learning)
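Most of the methods in this list have ready-made implementations in scikit-learn, which makes a first comparison cheap. The sketch below runs one on a synthetic, imbalanced dataset generated with `make_classification`, not on real alarm data. The small `MLPClassifier` stands in for the neural-network entry (it is not a deep-learning framework), and the members of the voting ensemble are an arbitrary choice.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (BaggingClassifier, GradientBoostingClassifier,
                              RandomForestClassifier, VotingClassifier)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

models = {
    "Naive Bayes": GaussianNB(),
    "Logistic Regression": LogisticRegression(max_iter=1000),
    "K-Nearest Neighbors": KNeighborsClassifier(),
    "Support Vector Machine": SVC(),
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "Bagging Decision Tree": BaggingClassifier(DecisionTreeClassifier(), random_state=0),
    "Boosted Decision Tree": GradientBoostingClassifier(random_state=0),
    "Random Forest": RandomForestClassifier(random_state=0),
    "Voting Classification": VotingClassifier(
        estimators=[("lr", LogisticRegression(max_iter=1000)),
                    ("dt", DecisionTreeClassifier(random_state=0)),
                    ("nb", GaussianNB())],
        voting="hard"),
    "Neural Network": MLPClassifier(max_iter=1000, random_state=0),
}

# Synthetic, imbalanced stand-in for an alarm dataset (about 5% positives).
X, y = make_classification(n_samples=2000, n_features=10, weights=[0.95], random_state=0)

scores = {}
for name, model in models.items():
    # Balanced accuracy averages the recall of both classes, so always
    # predicting the majority class scores only 0.5.
    scores[name] = cross_val_score(model, X, y, cv=3, scoring="balanced_accuracy").mean()
    print(f"{name:25s} {scores[name]:.3f}")
```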

Which algorithm to choose depends on the problem setup, on the dataset, on how imbalanced the dataset is, and on the metric that matters most for measuring performance: accuracy, sensitivity, specificity, precision, and whether the metric depends on prevalence (the relative frequency of the classes) or not. For an overview of the methods and of the metrics used in binary classification, you can refer, for instance, to an in-depth guide to supervised machine learning classification.
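To see why dependence on prevalence matters, consider a hypothetical classifier with fixed sensitivity (90%) and specificity (95%), evaluated once on a balanced sample and once on a sample with only 1% positives. The confusion-matrix counts are invented for illustration.

```python
def metrics(tp, fn, fp, tn):
    """Common binary-classification metrics from the four confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "sensitivity": tp / (tp + fn),  # true positive rate (recall)
        "specificity": tn / (tn + fp),  # true negative rate
        "precision": tp / (tp + fp),    # positive predictive value
    }


# Same hypothetical classifier (90% sensitivity, 95% specificity) at two prevalences.
balanced = metrics(tp=450, fn=50, fp=25, tn=475)       # 500 positives out of 1,000
imbalanced = metrics(tp=18, fn=2, fp=99, tn=1881)      # 20 positives out of 2,000

for name, m in (("balanced", balanced), ("imbalanced", imbalanced)):
    print(name, {k: round(v, 3) for k, v in m.items()})
```

Sensitivity and specificity are identical in both cases, whereas precision falls from about 0.95 to about 0.15 and accuracy also changes: these two metrics depend on prevalence.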

In our problem we have to deal with a very imbalanced dataset, as Table 1 shows: the Closed class is more than a hundred times more frequent than the Signaled class, even after merging Flagged into Signaled. This makes training the algorithm challenging. If the imbalance is not handled correctly, the trained algorithm will tend to always answer with the majority class (Closed). Such a classifier obtains a high accuracy but has no real ability to recognize the alarms that must be signaled, i.e. it generalizes poorly on the class that matters.
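The effect can be quantified with the column totals of Table 1 (361,010 Closed, 1,304 Flagged and 1,302 Signaled alarms, summed over all rows), merging Flagged into Signaled as in our setup. The "classifier" here is the trivial one that always answers Closed.

```python
# Column totals of Table 1, summed over all alarm types.
closed, flagged, signaled = 361_010, 1_304, 1_302

# Binary setup: Flagged alarms are treated as Signaled.
n_closed = closed
n_signaled = flagged + signaled
n_total = n_closed + n_signaled

imbalance_ratio = n_closed / n_signaled

# Trivial classifier that always answers "Closed".
accuracy = n_closed / n_total           # every Closed alarm is "correct"
true_positives = 0                      # it never recognizes a Signaled alarm
recall_signaled = true_positives / n_signaled

print(f"imbalance ratio : {imbalance_ratio:.1f} : 1")
print(f"accuracy        : {accuracy:.4f}")
print(f"Signaled recall : {recall_signaled:.1f}")
```

The accuracy is about 0.993 although not a single alarm that should be signaled is recognized, which is why plain accuracy is a misleading target here.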

Another important point in our classification task is that the risk of misclassification is not symmetric between the two classes. On the one hand, there is the risk of classifying an alarm as Closed when it should actually be signaled. This risk can be defined as the probability $P(\text{signaled} \mid C=\text{closed})$, where $C$ is the class assigned by the classifier, and it is the more severe of the two. If the software classifies an alarm as Closed, the system closes it, and the compliance officer has no further chance to change the decision if the software was wrong. This can seriously damage the relationship with the Regulator.

On the other hand, there is the risk that the software classifies an alarm as Signaled when it should actually be closed. This risk can be defined as the probability $P(\text{closed} \mid C=\text{signaled})$ and is less severe than the previous one. It is easier to manage because the alarm would not be closed automatically but would be forwarded for signaling to the Regulator, and the compliance officer could always check it manually to confirm or reject the decision of the ML.
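In terms of the confusion matrix, taking Signaled as the positive class and counting true positives ($TP$), false negatives ($FN$), false positives ($FP$) and true negatives ($TN$) on a test set, where $C$ is the class assigned by the classifier, the two risks correspond to two standard ratios:

$$
P(\text{signaled} \mid C=\text{closed}) = \frac{FN}{FN + TN} \quad \text{(false omission rate)},
\qquad
P(\text{closed} \mid C=\text{signaled}) = \frac{FP}{FP + TP} \quad \text{(false discovery rate)}.
$$

Like precision, both ratios depend on how frequent the Signaled class is, so they should be estimated on data with a realistic class balance.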

Given the above considerations, we should definitely favor a configuration of the classifier hyperparameters that minimizes the first kind of risk, since it has the most severe consequences.
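One simple way to favor the first kind of risk, offered here as an assumption rather than as the configuration we used, is to auto-close an alarm only when the classifier's estimated probability of Signaled falls below a cut-off chosen on validation data, such that at most a given number of truly Signaled validation alarms would have been closed. The function name `auto_close_threshold`, the probabilities and the labels are hypothetical.

```python
import numpy as np


def auto_close_threshold(p_signaled, y_true, max_missed=0):
    """Return a cut-off t such that auto-closing every alarm with p_signaled < t
    would close at most `max_missed` truly Signaled validation alarms (y_true == 1)."""
    positive_scores = np.sort(p_signaled[y_true == 1])
    if max_missed >= len(positive_scores):
        raise ValueError("max_missed must be smaller than the number of Signaled alarms")
    # Only positives scoring strictly below positive_scores[max_missed] get closed,
    # and there are at most max_missed of them.
    return positive_scores[max_missed]


# Made-up validation data: estimated P(Signaled) and true labels (1 = Signaled).
p = np.array([0.01, 0.02, 0.05, 0.10, 0.30, 0.04, 0.60, 0.20])
y = np.array([0, 0, 0, 0, 0, 1, 1, 0])

for k in (0, 1):
    t = auto_close_threshold(p, y, max_missed=k)
    closed = p < t
    missed = int((closed & (y == 1)).sum())
    print(f"max_missed={k}: cut-off={t:.2f}, auto-closed={int(closed.sum())}, "
          f"Signaled closed={missed}")
```

A cut-off that misses nothing on validation data does not guarantee zero misses in production; it simply trades fewer auto-closed alarms for a lower $P(\text{signaled} \mid C=\text{closed})$.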

We finally chose Random Forest as the classifier, since it is the method that best allows us to deal with an imbalanced dataset and with overfitting. In the next post, you will see how we managed the challenges described above and how we configured the training and testing of the classifier.
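For readers who want to experiment in the meantime, here is a minimal scikit-learn sketch of a Random Forest set up for an imbalanced binary problem, on synthetic data rather than real alarms. The hyperparameter values (`n_estimators`, `min_samples_leaf`) are illustrative assumptions, not the configuration discussed in the next post; `class_weight="balanced_subsample"` is one of the options scikit-learn offers to counter class imbalance.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split

# Synthetic stand-in for historical alarms: 1 = Signaled (Flagged included), 0 = Closed.
X, y = make_classification(n_samples=5000, n_features=12, n_informative=6,
                           weights=[0.99], random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=42)

forest = RandomForestClassifier(
    n_estimators=200,
    class_weight="balanced_subsample",  # class weights recomputed on each tree's bootstrap sample
    min_samples_leaf=5,                 # larger leaves make trees less prone to memorizing rare cases
    oob_score=True,                     # accuracy estimated on samples each tree did not see
    random_state=42,
)
forest.fit(X_train, y_train)

# Column 1 of predict_proba is the probability of class 1 (Signaled).
p_signaled = forest.predict_proba(X_test)[:, 1]
y_pred = (p_signaled >= 0.5).astype(int)
tn, fp, fn, tp = confusion_matrix(y_test, y_pred, labels=[0, 1]).ravel()

print(f"OOB accuracy: {forest.oob_score_:.3f}")
print(f"Signaled alarms predicted as Closed: {fn} of {fn + tp}")
```

The 0.5 cut-off is only a default; given the asymmetric risks, it is the natural knob to tune so that fewer Signaled alarms end up predicted as Closed.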

Stay tuned to see more insights!

References

Regulation (EU) No 596/2014 of the European Parliament and of the Council (MAR)

ESMA 2015/224 Final Report (technical advice on possible delegated acts concerning MAR)

Commission Delegated Regulation (EU) 2016/522 of 17 December 2015

Duda, R. O., Hart, P. E. & Stork, D. G. (2001). Pattern Classification (2nd ed.). Wiley.