14 December 2020 Anomaly detection with HTM: Anomaly Score and Anomaly Likelihood

3.1 Anomaly Score computation

In the previous blog post we showed how HTM networks continuously learn the spatio-temporal characteristics of their input data and how they predict what will come next. However, HTM networks do not model anomalies directly and do not output a usable anomaly score. In this blog post we describe a technique, introduced by Ahmad, Lavin, Purdy and Agha, for applying HTM to anomaly detection.

Given the current input $x_t$, $a(x_t)$ is the sparse binary vector representing the columns activated by the current input, computed by the spatial pooling algorithm of the HTM network, while $\pi(x_{t-1})$ is the sparse binary vector representing the columns the network predicted at the previous time step, computed by the temporal memory algorithm.

Note that $a(x_t)$ and $\pi(x_{t-1})$ are binary vectors with the same dimensionality, equal to the number of columns (a typical value is 2048).

We define the anomaly score $s_t$ as a scalar value given by

$s_t = 1 - \dfrac{\pi(x_{t-1}) \cdot a(x_t)}{\mid a(x_t) \mid}$ ,

where $\mid a(x_t) \mid$ is the norm of $a(x_t)$, i.e. the total number of 1 bits (active columns) in the vector, while the scalar product $\pi(x_{t-1}) \cdot a(x_t)$ counts the columns active at time $t$ that had been predicted at the previous time step.

Thus the anomaly score represents the fraction of active columns at time $t$ that were not predicted at $t-1$: $s_t = 0$ when every active column was predicted, and $s_t = 1$ when none was.

One can manually set a threshold (e.g. 0.5, i.e. 50% of the active columns unpredicted) and report an anomaly every time the anomaly score exceeds it.
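To make the formula concrete, here is a minimal sketch of the raw anomaly score and the fixed-threshold rule. It assumes each sparse binary vector is represented by the set of indices of its 1 bits, so the scalar product of two binary vectors becomes the size of a set intersection. The function names `anomaly_score` and `is_anomaly` are hypothetical illustrations, not the API of an HTM library.

```python
from typing import Set

ANOMALY_THRESHOLD = 0.5  # example threshold from the text (50%)


def anomaly_score(predicted_columns: Set[int], active_columns: Set[int]) -> float:
    """Raw anomaly score s_t = 1 - (pi(x_{t-1}) . a(x_t)) / |a(x_t)|.

    Each binary vector is given as the set of indices of its 1 bits, so the
    dot product of the two vectors is the size of the set intersection.
    """
    if not active_columns:
        # Assumption: with no active columns nothing was mispredicted.
        return 0.0
    predicted_and_active = len(predicted_columns & active_columns)
    return 1.0 - predicted_and_active / len(active_columns)


def is_anomaly(score: float, threshold: float = ANOMALY_THRESHOLD) -> bool:
    """Report an anomaly when the score exceeds the manually chosen threshold."""
    return score > threshold


# Two of the four active columns were predicted at t-1, so s_t = 0.5.
s = anomaly_score(predicted_columns={3, 17, 42, 100}, active_columns={3, 17, 55, 900})
print(s, is_anomaly(s))  # 0.5 False
```

Using sets keeps the sketch dependency-free; with a few thousand columns and around 2% sparsity, the intersection is cheap to compute.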

3.2 Anomaly Likelihood computation

The anomaly score described above is an instantaneous measure of the predictability of the current input stream, and it works well for anomaly detection in certain scenarios. In some applications, however, the underlying system is inherently noisy and unpredictable, so instantaneous predictions are often incorrect. To handle such scenarios, the distribution of past anomaly scores is used to estimate how likely it is that the current state is anomalous. The anomaly likelihood is thus a probabilistic metric quantifying how anomalous the current state is, based on the prediction history of the HTM model.

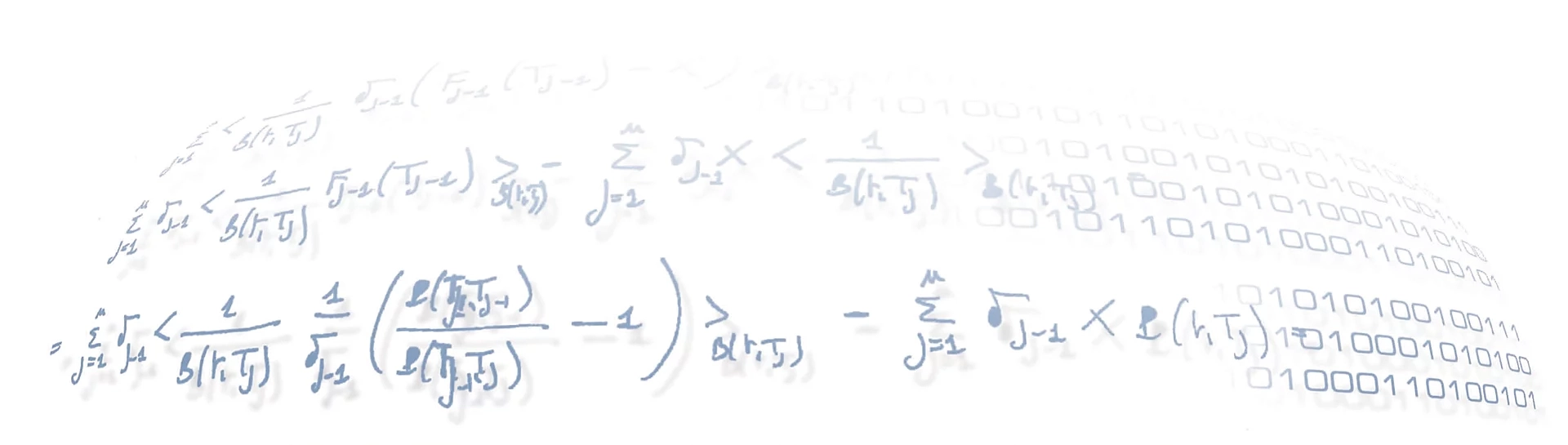

To compute the anomaly likelihood we consider a window of the last $W$ anomaly scores and model their distribution as a normal distribution over this rolling window, whose sample mean $\mu_t$ and variance $\sigma_t^2$ are updated at every time step as follows:

$\mu_t = \dfrac{\sum_{i=0}^{W-1} s_{t-i}}{W}$;

$\sigma_t^2 = \dfrac{\sum_{i=0}^{W-1} \left( s_{t-i} - \mu_t\right)^2}{W-1}$.
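The rolling statistics above can be sketched with a fixed-length queue. This is a minimal illustration, not a reference implementation: the class name `RollingScoreStats` is hypothetical, and it assumes that before $W$ scores have arrived the statistics are computed over whatever scores are available. `statistics.variance` from the Python standard library divides by $n-1$, matching the $W-1$ denominator of $\sigma_t^2$.

```python
import statistics
from collections import deque


class RollingScoreStats:
    """Rolling mean and sample variance of the last W anomaly scores.

    Hypothetical helper for illustration; until W scores have been seen,
    the statistics are computed over the scores available so far.
    """

    def __init__(self, window: int) -> None:
        if window < 2:
            raise ValueError("window must be at least 2 (variance divides by W - 1)")
        # A deque with maxlen drops the oldest score once W scores are stored.
        self.scores = deque(maxlen=window)

    def update(self, score: float) -> None:
        self.scores.append(score)

    def mean(self) -> float:
        # mu_t: average of the scores currently in the window
        return statistics.mean(self.scores)

    def variance(self) -> float:
        # sigma_t^2: statistics.variance uses the (n - 1) denominator
        # and needs at least two scores in the window
        return statistics.variance(self.scores)


stats = RollingScoreStats(window=4)
for s in [0.0, 0.5, 1.0, 0.5, 0.0]:
    stats.update(s)
# The window now holds [0.5, 1.0, 0.5, 0.0]
print(stats.mean())  # 0.5
```

Recomputing the sums over the window at each step costs O(W); an incremental update of running sums would be cheaper for large $W$, at the price of some floating-point drift.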

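Then we define the anomaly likelihood as the complement of the tail probability, using the Q-function $Q(x) = \frac{1}{2}\,{\rm erfc}\left(x/\sqrt{2}\right)$, i.e. the probability that a standard normal variable exceeds $x$: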

$L_t = 1 - Q \left( \dfrac{\tilde{\mu}_t - \mu_t}{\sigma_t}\right)$,

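where $\tilde{\mu}_t$ is a short-term moving average of the anomaly scores, defined like $\mu_t$ but over a shorter window $W' \ll W$. When the recent scores are much higher than the long-term mean, the argument of $Q$ is large and positive, $Q$ is close to 0, and $L_t$ approaches 1.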
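Putting the two windows together, the likelihood can be sketched in a few lines, with $Q$ expressed through `math.erfc` via the standard identity $Q(x) = \frac{1}{2}\,{\rm erfc}(x/\sqrt{2})$. This is a simplified illustration under stated assumptions: the function names are hypothetical, the score history is assumed to be a list ordered from oldest to newest, and the small floor on $\sigma_t$ (`1e-6`) is an arbitrary choice added here only to avoid dividing by zero when all scores in the window are equal.

```python
import math
import statistics
from typing import Sequence


def q_function(x: float) -> float:
    """Standard normal tail probability Q(x) = P(Z > x) = erfc(x / sqrt(2)) / 2."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def anomaly_likelihood(scores: Sequence[float], window: int, short_window: int) -> float:
    """L_t = 1 - Q((mu_tilde_t - mu_t) / sigma_t) from a history of anomaly scores.

    `scores` is ordered oldest to newest; only the last `window` values (W)
    and the last `short_window` values (W') are used.
    """
    if window < 2 or not (1 <= short_window < window <= len(scores)):
        raise ValueError("need 1 <= short_window < window <= len(scores) and window >= 2")
    long_term = list(scores[-window:])
    short_term = list(scores[-short_window:])
    mu = statistics.mean(long_term)         # mu_t over the last W scores
    sigma = statistics.stdev(long_term)     # sigma_t, (W - 1) denominator
    # Assumption: arbitrary floor to avoid division by zero on a constant window.
    sigma = max(sigma, 1e-6)
    mu_short = statistics.mean(short_term)  # mu_tilde_t over the last W' scores
    return 1.0 - q_function((mu_short - mu) / sigma)


# A long stretch of well-predicted inputs followed by two poorly predicted steps
history = [0.0] * 18 + [1.0, 1.0]
print(anomaly_likelihood(history, window=20, short_window=2))  # close to 1
```

When the short-term mean equals the long-term mean the argument of $Q$ is 0 and the likelihood is exactly 0.5, which is a quick sanity check for any implementation.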

As with the raw anomaly score, one can manually set a threshold on $L_t$ in order to report an anomaly.

In this case it is useful to define a parameter $\epsilon$ such that an anomaly is detected if $L_t \ge 1 - \epsilon$. A reasonable value for $\epsilon$ is $\epsilon = 10^{-5}$.

The likelihood values are often very close to 1, frequently reaching four or five 9s (e.g. 0.9999 or 0.99999), so a logarithmic scale is more convenient for visualization and thresholding. The likelihood is therefore transformed using the formula

${\rm logL}_t = \dfrac{\log(1.0000000001 - L_t)}{\log(1.0 - 0.9999999999)}$ ,

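so that the threshold $L_t \ge 1 - \epsilon$, with $\epsilon = 10^{-5}$, becomes approximately ${\rm logL}_t \ge 0.5$, since $\log(10^{-5}) / \log(10^{-10}) = 0.5$. The small offset in $1.0000000001$ keeps the argument of the logarithm positive when $L_t = 1$.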
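The log transform and the $\epsilon$ threshold can be checked numerically with a short sketch. The function names `log_likelihood` and `is_likelihood_anomaly` are hypothetical; the constants are taken directly from the formulas above.

```python
import math

EPSILON = 1e-5  # threshold parameter epsilon from the text


def log_likelihood(likelihood: float) -> float:
    """logL_t = log(1.0000000001 - L_t) / log(1.0 - 0.9999999999).

    The 1e-10 offset keeps the argument of log positive when L_t == 1,
    and the denominator (about log(1e-10)) rescales the result so that
    L_t == 1 maps to roughly 1.
    """
    return math.log(1.0000000001 - likelihood) / math.log(1.0 - 0.9999999999)


def is_likelihood_anomaly(likelihood: float, epsilon: float = EPSILON) -> bool:
    """Anomaly rule on the raw likelihood: L_t >= 1 - epsilon."""
    return likelihood >= 1.0 - epsilon


for L in (0.5, 0.999, 0.999999):
    print(f"L={L}  logL={log_likelihood(L):.4f}  anomaly={is_likelihood_anomaly(L)}")
```

Because of the $10^{-10}$ offsets, $L_t = 1 - 10^{-5}$ maps to a value just below 0.5 rather than exactly 0.5, so the two thresholds agree only approximately right at the boundary.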

In the next blog post we will show an application of anomaly detection using an HTM system on a real-world dataset.